Learn

About Us

The university that empowers ingenuity, innovation, and entrepreneurship.

Our Academic

Offer

With our active learning methodology, our students experience engineering from day one.

Ingenuity,

dedication, and

the pursuit

of excellence

Our students' DNA: achieving highest academic achievement and personal development.

International

alliances

We've built strong relationships with the best educational institutions in the world.

Research

Experience

The right path to finding better solutions.

Sustainability UTEC

Sustainability documents

Contact:

Giancarlo Marcone

HACS DIRECTOR

gmarcone@utec.edu.pe

Eighteen years ago, Ann, then 30, suffered a brain stem stroke that left her severely paralyzed, with no control over any muscle in her body. At first she could not even breathe on her own. To this day, doctors do not know what caused her stroke.

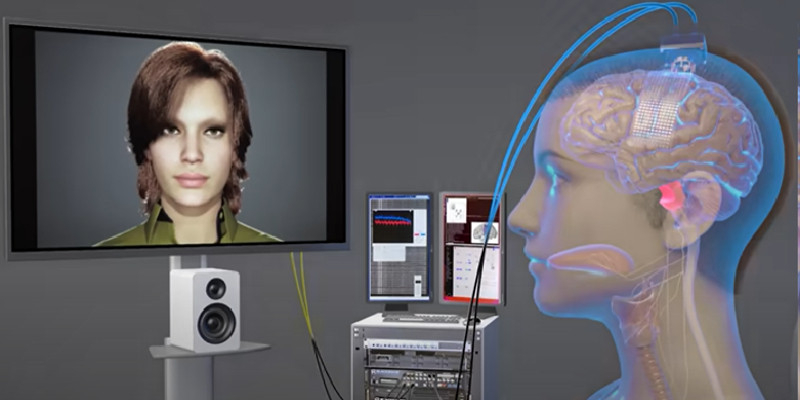

A group of researchers at the University of California, led by Dr. Edward F. Chang, has developed a brain-computer interface that decodes Ann's brain signals and converts them into synthesized speech, which is spoken aloud by a digital avatar. The avatar reproduces the jaw and lip movements of speech and can also recreate facial expressions of joy, sadness, and surprise to convey emotion.

The system converts brain signals to text at a median rate of 78 words per minute with a median word error rate of 25%, surpassing existing technologies. Instead of recognizing whole words, it identifies phonemes, the smallest units of speech, which improves both speed and accuracy: the system only needed to learn 39 phonemes to decipher any English word.

To capture the signals, the researchers implanted an array of 253 electrodes on the surface of the patient's brain, around the lateral sulcus (Sylvian fissure), over areas involved in speech. The electrodes connect by a cable to a port fixed to the head, which carries the brain's electrical signals to computers. To create the avatar's digital voice, the team devised an artificial intelligence algorithm that recognizes the brain signals associated with speech, and then personalized the synthesized voice to sound like the patient's voice before the injury, using a recording of her wedding speech.
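To make the word error rate figure concrete, here is a minimal sketch of how that metric is conventionally computed: the number of word substitutions, deletions, and insertions needed to turn the decoded sentence into the intended one, divided by the length of the intended sentence. This is the standard textbook definition, not the evaluation code used by the researchers; the function name `word_error_rate` and the example sentences are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Illustrative helper (hypothetical name), not taken from the study's code.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One wrong word in a four-word sentence gives a 25% word error rate.
print(word_error_rate("what time is it", "what time was it"))
```

Under this definition, a 25% word error rate means that, roughly, one word in four has to be corrected, added, or removed to recover the intended sentence.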

This work, developed by the Bioengineering departments of the University of California at Berkeley and San Francisco, offers people who cannot speak the possibility of communicating at a speed much closer to that of natural conversation and of holding far more fluent conversations. It is a remarkable example of how bioengineering can improve the quality of life of people living with permanent damage.

References

Metzger, S.L., Littlejohn, K.T., Silva, A.B. et al. A high-performance neuroprosthesis for speech decoding and avatar control. Nature (2023). https://doi.org/10.1038/s41586-023-06443-4

GitHub - UCSF-Chang-Lab-BRAVO/multimodal-decoding: Code associated with the paper "A high-performance neuroprosthesis for speech decoding and avatar control", published in Nature in 2023.
